
Trying out the ollama API

AI/Llama · 2024. 9. 2. 10:16

Official docs

https://github.com/ollama/ollama/blob/main/docs/api.md

List running models

http://localhost:11434/api/ps

List local models

http://localhost:11434/api/tags
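
Both GET endpoints above return a JSON object with a `models` array, so the same small parser can serve either one. A minimal Python sketch, assuming Ollama is listening on the default `localhost:11434`; `fetch_json` and `model_names` are names made up here for illustration, not part of any ollama client.

```python
import json
import urllib.request
from typing import List

BASE_URL = "http://localhost:11434"  # assumption: default local Ollama address


def fetch_json(path: str) -> dict:
    """GET a path on the local Ollama server and decode the JSON body."""
    with urllib.request.urlopen(BASE_URL + path) as resp:
        return json.load(resp)


def model_names(payload: dict) -> List[str]:
    """Pull the model names out of an /api/tags or /api/ps style reply."""
    return [m["name"] for m in payload.get("models", [])]
```

With the server running, `model_names(fetch_json("/api/tags"))` should list the installed models and `model_names(fetch_json("/api/ps"))` the ones currently loaded.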

POST /api/show

curl http://localhost:11434/api/show -d '{"name": "llama3.1"}'

POST /api/generate

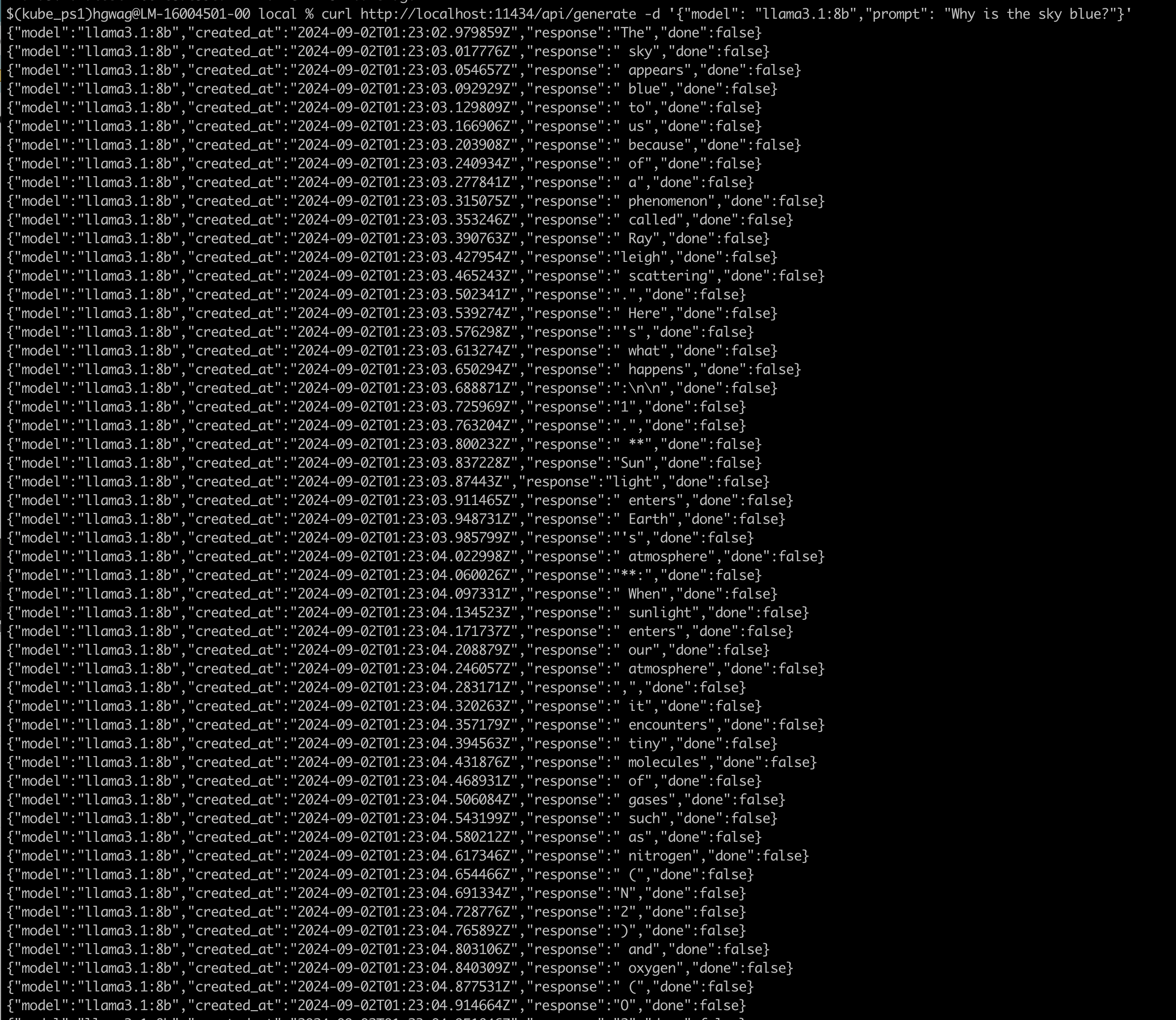

curl http://localhost:11434/api/generate -d '{"model": "llama3.1","prompt": "Why is the sky blue?"}'

The answer comes back far too long.

Is something wrong?
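
Probably nothing is wrong. According to the official API docs, /api/generate streams by default, so the reply arrives as many small JSON objects, one per line. Each object carries a fragment of text in `response`, and the last one has `"done": true`. The sketch below joins those fragments back into one string. `join_stream` is a hypothetical helper, and the sample lines are made up to mimic the shape of the stream rather than captured from a real run.

```python
import json
from typing import Iterable


def join_stream(lines: Iterable[str]) -> str:
    """Concatenate the text fragments of a streamed /api/generate reply."""
    parts = []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines between objects
        chunk = json.loads(line)
        parts.append(chunk.get("response", ""))
        if chunk.get("done"):
            break  # final object of the stream
    return "".join(parts)


# Made-up sample in the shape of a streamed reply (not real model output).
sample = [
    '{"model": "llama3.1", "response": "The sky", "done": false}',
    '{"model": "llama3.1", "response": " is blue.", "done": false}',
    '{"model": "llama3.1", "response": "", "done": true}',
]
print(join_stream(sample))  # prints: The sky is blue.
```

Against a live server, the same function could be fed the lines of the HTTP response body once they are decoded to text.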

Setting "stream": false together with "format": "json", as below, makes the output much shorter.

curl http://localhost:11434/api/generate -d '{ "model": "llama3.1", "prompt": "What color is the sky at different times of the day? Respond using JSON", "format": "json", "stream": false }'

curl -X POST http://localhost:11434/api/chat -d '{"model":"llama3.1:8b","messages":[{"role":"user","content":"why is the sky blue?"}]}'
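
The same two requests can be sketched in Python with only the standard library. With "stream": false the server returns a single JSON object. For /api/generate the text is in `response` (itself a JSON string when "format": "json" is set), and for /api/chat it is in `message.content`. The helper names below are made up for illustration. The sketch also adds "stream": false to the chat call, which the curl command above does not do, so the chat reply is a single object too.

```python
import json
import urllib.request

BASE_URL = "http://localhost:11434"  # assumption: default local Ollama address


def build_request(path: str, payload: dict) -> urllib.request.Request:
    """Build a JSON POST request for the local server (nothing is sent yet)."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        BASE_URL + path,
        data=body,  # a request with a body is sent as POST by urllib
        headers={"Content-Type": "application/json"},
    )


def post_json(path: str, payload: dict) -> dict:
    """Send the request and decode a single-object (non-streamed) reply."""
    with urllib.request.urlopen(build_request(path, payload)) as resp:
        return json.load(resp)


def generate_json(model: str, prompt: str) -> dict:
    """Ask /api/generate for a JSON-formatted answer and parse it."""
    reply = post_json("/api/generate", {
        "model": model,
        "prompt": prompt,
        "format": "json",
        "stream": False,
    })
    # May raise json.JSONDecodeError if the model output was cut off.
    return json.loads(reply["response"])


def chat_once(model: str, content: str) -> str:
    """Send one user message to /api/chat and return the assistant's text."""
    reply = post_json("/api/chat", {
        "model": model,
        "messages": [{"role": "user", "content": content}],
        "stream": False,  # added here so the reply is one object
    })
    return reply["message"]["content"]
```

For example, `chat_once("llama3.1:8b", "why is the sky blue?")` would return the whole answer as one string, assuming that model has been pulled locally.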